Steps:

- Spring Batch Read From File And Write To Database From Format

- How To Create A File And Write To It

- Python Create File And Write To It

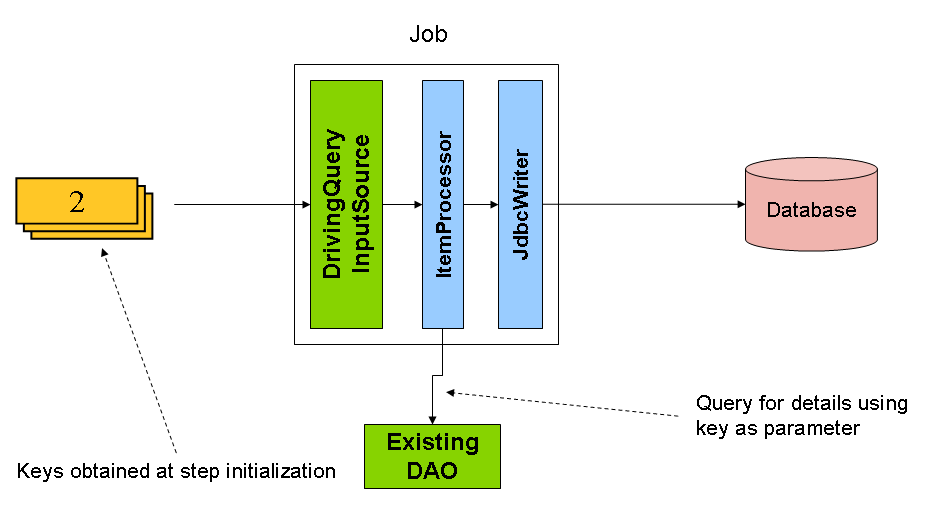

- Read 'Select x, y, z from TABLE_1' from Database1 into a ResultSet.

- Pass the ResultSet to a writer.

- Write all records returned by the ResultSet to TABLE_2 in Database2.

An ItemReader reads data into a Spring Batch application from a particular source, whereas an ItemWriter writes data from the application to a particular destination. An ItemProcessor is a class that contains the processing code applied to the data after it has been read.

Requirements:

- Do not create any unused Objects to hold the data after reading from the ResultSet. (i.e. no Table1.class)

- Use as much pre-built functionality as possible from the Spring Batch framework.

- No DB Link.

NOTE: Class names for me to reference are enough to get me on the right path.

2 Answers

Assuming you use JdbcPagingItemReader and JdbcBatchItemWriter, you can use:

- the ColumnMapRowMapper from spring-jdbc

- a self-implemented ItemSqlParameterSourceProvider
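
The combination above could be wired roughly as follows. This is a minimal, untested sketch that assumes Spring Batch 4.x or 5.x package names, two `DataSource` beans qualified as `database1` and `database2` (hypothetical names), and that column `x` is unique so it can serve as the paging sort key. The reader uses `ColumnMapRowMapper`, the spring-jdbc row mapper that returns each row as a `Map`, so no `Table1` class is needed.

```java
import java.util.Map;
import javax.sql.DataSource;

import org.springframework.batch.item.database.ItemSqlParameterSourceProvider;
import org.springframework.batch.item.database.JdbcBatchItemWriter;
import org.springframework.batch.item.database.JdbcPagingItemReader;
import org.springframework.batch.item.database.Order;
import org.springframework.batch.item.database.builder.JdbcBatchItemWriterBuilder;
import org.springframework.batch.item.database.builder.JdbcPagingItemReaderBuilder;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.jdbc.core.ColumnMapRowMapper;
import org.springframework.jdbc.core.namedparam.MapSqlParameterSource;

@Configuration
public class CopyTableConfig {

    // Reads TABLE_1 page by page; each row becomes a Map of column label -> value.
    @Bean
    public JdbcPagingItemReader<Map<String, Object>> table1Reader(
            @Qualifier("database1") DataSource database1) { // assumed bean name
        return new JdbcPagingItemReaderBuilder<Map<String, Object>>()
                .name("table1Reader")
                .dataSource(database1)
                .selectClause("select x, y, z")
                .fromClause("from TABLE_1")
                .sortKeys(Map.of("x", Order.ASCENDING)) // assumption: x is unique
                .rowMapper(new ColumnMapRowMapper())
                .pageSize(100)
                .build();
    }

    // Writes each Map to TABLE_2 using named parameters taken from the Map keys.
    @Bean
    public JdbcBatchItemWriter<Map<String, Object>> table2Writer(
            @Qualifier("database2") DataSource database2) { // assumed bean name
        // The "self-implemented" provider: wrap the row Map as named parameters.
        // Keys follow the column labels reported by the driver (often upper case),
        // so the :x, :y, :z names may need adjusting to match.
        ItemSqlParameterSourceProvider<Map<String, Object>> provider =
                row -> new MapSqlParameterSource(row);

        return new JdbcBatchItemWriterBuilder<Map<String, Object>>()
                .dataSource(database2)
                .sql("insert into TABLE_2 (x, y, z) values (:x, :y, :z)")
                .itemSqlParameterSourceProvider(provider)
                .build();
    }
}
```

Because the two beans use different data sources, no DB link is involved; rows travel through the application as plain maps.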

Your wish to save on memory allocations is clear, but think twice about whether maximum optimization is worth the side effects and problems it brings.

First of all, if you just want to read rows from table A and write them to table B without any transformation of the data, then Spring Batch is not the best choice. You might still want to use Spring Batch in this scenario if you want to retry (using RetryTemplate) when an exception occurs during writing, or if you want to skip certain exceptions (e.g. skip DataIntegrityViolationException to ignore duplicate entries).
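
As an illustration of the retry and skip behaviour mentioned above, a fault-tolerant step might be declared like this. It is an untested sketch that assumes the Spring Batch 5.x `StepBuilder` API and uses the step builder's built-in retry and skip settings in place of a hand-written `RetryTemplate`; the step name, chunk size, limits, and the choice of `TransientDataAccessException` as the retryable type are all illustrative assumptions.

```java
import java.util.Map;

import org.springframework.batch.core.Step;
import org.springframework.batch.core.repository.JobRepository;
import org.springframework.batch.core.step.builder.StepBuilder;
import org.springframework.batch.item.ItemReader;
import org.springframework.batch.item.ItemWriter;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.dao.DataIntegrityViolationException;
import org.springframework.dao.TransientDataAccessException;
import org.springframework.transaction.PlatformTransactionManager;

@Configuration
public class CopyStepConfig {

    @Bean
    public Step copyStep(JobRepository jobRepository,
                         PlatformTransactionManager transactionManager,
                         ItemReader<Map<String, Object>> reader,
                         ItemWriter<Map<String, Object>> writer) {
        return new StepBuilder("copyStep", jobRepository) // hypothetical step name
                .<Map<String, Object>, Map<String, Object>>chunk(100, transactionManager)
                .reader(reader)
                .writer(writer)
                .faultTolerant()
                // Retry writes that fail for transient reasons, up to 3 attempts.
                .retry(TransientDataAccessException.class)
                .retryLimit(3)
                // Skip rows that violate constraints (e.g. duplicates), up to 10 rows.
                .skip(DataIntegrityViolationException.class)
                .skipLimit(10)
                .build();
    }
}
```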

So what you can do (although it is not a very good approach) is to use flyweight objects, i.e. the object that you return to the framework is always the same instance, but it is filled with new contents each time (the code is not tested, AS IS).

Be aware of the limits of this approach: it works only if your batch size is 1 (otherwise you end up with N references to the same object in a batch, all holding the last row read) and only if your reader has prototype scope (because it is stateful).
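
The batch-size restriction can be shown in plain Java without any Spring types. The sketch below is not the answer's original code: `FlyweightReader`, its `read()` method, and `readChunk` are hypothetical names that only mimic the reader contract (return the next item, or `null` when the input is exhausted) and the way a chunk-oriented step buffers items before writing them.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

public class FlyweightDemo {

    /** Hypothetical reader that hands out the same Map instance on every call. */
    static class FlyweightReader {
        private final Iterator<Object[]> rows;
        private final Map<String, Object> shared = new HashMap<>(); // the flyweight

        FlyweightReader(List<Object[]> source) {
            this.rows = source.iterator();
        }

        /** Refills the shared Map with the next row; returns null at end of input. */
        Map<String, Object> read() {
            if (!rows.hasNext()) {
                return null;
            }
            Object[] row = rows.next();
            shared.put("x", row[0]);
            shared.put("y", row[1]);
            shared.put("z", row[2]);
            return shared;
        }
    }

    /** Buffers up to chunkSize items, as a chunk-oriented step does before writing. */
    static List<Map<String, Object>> readChunk(FlyweightReader reader, int chunkSize) {
        List<Map<String, Object>> chunk = new ArrayList<>();
        Map<String, Object> item;
        while (chunk.size() < chunkSize && (item = reader.read()) != null) {
            chunk.add(item);
        }
        return chunk;
    }

    public static void main(String[] args) {
        List<Object[]> table1 = List.of(
                new Object[] {1, "a", true},
                new Object[] {2, "b", false},
                new Object[] {3, "c", true});

        // Chunk size 3: the list holds three references to one object,
        // so every entry shows the values of the last row read.
        List<Map<String, Object>> chunk = readChunk(new FlyweightReader(table1), 3);
        System.out.println(chunk.get(0) == chunk.get(2)); // prints "true"
        System.out.println(chunk.get(0).get("x"));        // prints "3"

        // Chunk size 1: each item is consumed before the next read overwrites it.
        FlyweightReader reader = new FlyweightReader(table1);
        List<Map<String, Object>> single;
        while (!(single = readChunk(reader, 1)).isEmpty()) {
            System.out.println(single.get(0).get("x"));   // prints 1, then 2, then 3
        }
    }
}
```

The same statefulness is why such a reader cannot be shared between concurrently running steps: two callers would overwrite each other's row in the single shared object.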